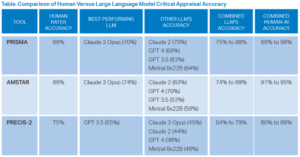

The collaborative human–AI approach yielded superior performance compared to individual LLMs, with accuracies reaching 96 percent for PRISMA and 95 percent for AMSTAR when humans and LLMs worked together.

Explore This Issue

ACEP Now: Jan 01Key Results

LLMs alone performed worse than humans; however, a collaborative approach between humans and LLMs showed potential for reducing the workload for human raters by identifying high-certainty ratings, especially for PRISMA and AMSTAR (see table).

Talk Nerdy to Me

- Bias in training data and prompts: LLMs rely on the data they were trained on, which may introduce unseen biases. In addition, the behavior of the model is impacted by the information it was fed—i.e. prompts. For example, when the LLMs were required to pull relevant quotes, they did not follow the instructions, often pulling too many or pulling quotes from analyses they had already performed, rather than the source material.

- Limited contextual understanding: LLMs lack the nuanced judgment required to assess the methodological quality of complex trials. This was illustrated by LLMs reporting low accuracy, while adding a human rater reported high accuracy. LLMs still don’t process in the same way as humans, but when we are the gold standard, is that something we want to match, or is there a benefit to re-evaluating our responses after the LLM goes through to see if we are making errors?

- Lack of transparency in LLM decision processes: Transparency in decision-making LLMs presents significant challenges. A key issue is the “black box” nature of these systems. This often makes it difficult to explain how they reach their decisions, even to experts. LLMs can generate sophisticated outputs from simple prompts, but the underlying reasons are opaque. AI often misunderstands or simplifies tasks, creating outputs that can be unpredictable and difficult to interpret and further complicating transparency. This raises concerns about trust in the LLMs’ results.

SGEM Bottom Line

LLMs alone are not yet accurate enough to replace human raters for complex critical appraisals. However, a human–AI collaboration strategy shows promise for reducing the workload for human raters without sacrificing accuracy.

Case Resolution

You start playing around with LLMs to evaluate the SRMA adherence to the PRISMA guidelines while verifying the accuracy of the AI-generated answers.

Remember to be skeptical of anything you learn, even if you heard it on the Skeptics’ Guide to Emergency Medicine.

Thank you to Dr. Laura Walker who is an associate professor of emergency medicine and the vice chair for digital emergency medicine at the Mayo Clinic, for her help with this review.

Pages: 1 2 3 | Single Page

No Responses to “Can AI Critically Appraise Medical Research?”